2020. 2. 9. 02:53 · Uncategorized

Introduction

What’s great about the Kafka Streams API is not just how fast your application can process data with it, but also how fast you can get up and running with your application in the first place, regardless of whether you implement it in Java or in another JVM-based language such as Scala or Clojure. Unlike competing technologies, Apache Kafka® and its Streams API do not require you to install a separate processing cluster.


In fact, it’s pretty common for our users to have their first application or proof of concept running in a matter of minutes. Some users, for example, test-drive and develop their applications on their laptops against embedded, in-memory instances of Kafka and related services. They also use the same setup for automated integration testing in CI environments backed by Jenkins or Travis CI. We use exactly such a setup ourselves.

Docker and Kafka Streams API: A Perfect Match

Many developers love container technologies such as Docker to speed up the iterative development they do on their laptops: for example, to quickly spin up a containerized deployment consisting of multiple services such as Apache Kafka.

Additionally, Docker is a very popular choice among Kafka users for containerizing and deploying applications and microservices on platforms such as Kubernetes or in the cloud. And yes, you can containerize applications that use the Kafka Streams API, because they are standard Java applications. This is unlike related technologies such as Apache® Spark™ or Apache Flink®, where you must install and run special processing clusters into which you then submit cluster-specific “processing jobs.” (As a side note, this also makes deployment very flexible and accommodates independently working teams across a company.) It also means you can use the same organizational processes and technical tooling for developing, testing, packaging, deploying, and monitoring your Kafka Streams applications as you do everywhere else in your company.
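Because a Kafka Streams application is an ordinary Java program, containerizing it mostly comes down to passing deployment-specific settings in from the outside. A common pattern, shown here as a minimal sketch, is to read connection settings from environment variables so that the same image can run unchanged on a laptop, in CI, or on Kubernetes. The environment variable names `KAFKA_BOOTSTRAP_SERVERS` and `STREAMS_APPLICATION_ID`, the class name, and the default values are hypothetical; `application.id` and `bootstrap.servers` are the standard Kafka Streams configuration keys. The sketch deliberately avoids any Kafka dependency so it runs on its own.

```java
import java.util.Map;
import java.util.Properties;

/**
 * Hypothetical helper: builds Kafka Streams configuration from environment
 * variables. The variable names and defaults are assumptions for this sketch.
 */
final class ContainerConfig {

    private ContainerConfig() {}

    static Properties fromEnv(Map<String, String> env) {
        Properties props = new Properties();
        // "application.id" and "bootstrap.servers" are standard Kafka Streams config keys;
        // fall back to local-development defaults when a variable is not set.
        props.put("application.id",
                env.getOrDefault("STREAMS_APPLICATION_ID", "my-streams-app"));
        props.put("bootstrap.servers",
                env.getOrDefault("KAFKA_BOOTSTRAP_SERVERS", "localhost:9092"));
        return props;
    }

    public static void main(String[] args) {
        // Inside a container, System.getenv() reflects the variables set by the
        // orchestrator (e.g., `docker run -e ...` or a Kubernetes manifest).
        Properties props = fromEnv(System.getenv());
        System.out.println(props);
        // A real application would pass these Properties to
        // new KafkaStreams(topology, props) and call start() on it.
    }
}
```

The design choice here is simply that nothing environment-specific is baked into the image; the container platform supplies it at startup.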

For example, if you don’t like containers but prefer deploying to VMs with Puppet or Ansible, no problem. If you do like containers and enjoy deploying to Kubernetes or a cloud service like AWS EC2, no problem either. And, speaking of containers and Docker, this brings us to the focus of this blog post.

To get started with the Kafka Streams API, most users typically begin with the resources in the Confluent documentation. To make your getting-started experience even better, we recently added a new Docker-based demo setup. This demo is the focus of this blog post, and because it is a one-click experience, the remainder of this post will be short and concise!

Creating the Kafka Music Demo

We will run the Confluent Kafka Music demo application in a containerized, multi-service deployment, using Docker. If you are running the demo for the first time, this will take you about five minutes.


Afterward, it will take just a few seconds! Our Kafka Music application demonstrates how to build a music charts application that continuously computes, in real time, the latest charts, such as the “Top 5 songs” per music genre. It exposes its latest processing results (the latest music charts) through Kafka’s Interactive Queries feature combined with a REST API. The application’s input data is in Avro format and comes from two sources: a stream of play events (think: “song X was just played”) and a stream of song metadata (“song X was written by artist Y”).
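To make the chart computation concrete, here is a minimal sketch in plain Java (not the Kafka Streams API) of the logic behind a “Top 5 songs per genre” chart: each play event is joined with the song metadata to find its genre, plays are counted per song, and the most-played songs per genre are kept. The `TopSongs` class, the simplified `Song` and `PlayEvent` records, and the one-shot batch processing over a list are all assumptions for illustration; the demo itself works continuously on Avro-encoded Kafka topics.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class TopSongs {

    // Hypothetical, simplified stand-ins for the demo's song metadata and play events.
    record Song(long id, String name, String genre) {}
    record PlayEvent(long songId) {}

    private TopSongs() {}

    /** For each genre, returns up to n song ids by descending play count (ties: ascending id). */
    static Map<String, List<Long>> topNPerGenre(List<PlayEvent> plays,
                                                Map<Long, Song> songsById,
                                                int n) {
        // Join each play with its song metadata and count plays per (genre, song).
        Map<String, Map<Long, Long>> countsByGenre = new HashMap<>();
        for (PlayEvent play : plays) {
            Song song = songsById.get(play.songId());
            if (song == null) {
                continue; // no metadata for this song: skip it, as an inner join would
            }
            countsByGenre
                .computeIfAbsent(song.genre(), g -> new HashMap<>())
                .merge(song.id(), 1L, Long::sum);
        }

        // Per genre, sort songs by play count (descending), then by id, and keep the first n.
        Map<String, List<Long>> result = new HashMap<>();
        for (Map.Entry<String, Map<Long, Long>> e : countsByGenre.entrySet()) {
            List<Map.Entry<Long, Long>> entries = new ArrayList<>(e.getValue().entrySet());
            entries.sort(Map.Entry.<Long, Long>comparingByValue(Comparator.reverseOrder())
                    .thenComparing(Map.Entry.<Long, Long>comparingByKey()));
            List<Long> top = new ArrayList<>();
            for (int i = 0; i < Math.min(n, entries.size()); i++) {
                top.add(entries.get(i).getKey());
            }
            result.put(e.getKey(), top);
        }
        return result;
    }
}
```

In a Kafka Streams application, such counts would typically be maintained incrementally in state stores as new events arrive, rather than recomputed from a full list as in this sketch.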

The corresponding Avro schemas are registered with a Confluent Schema Registry instance. We will run the following containerized services:

- The Confluent Kafka Music demo application, with its REST API listening at port 7070/tcp.
- A single-node Kafka cluster with a single-node ZooKeeper ensemble.

If you want a preview of what we will do in the following sections, take a look at the accompanying screencast.

Prerequisite

There is only one requirement to meet: if you haven’t done so already, install a recent version of Docker and Docker Compose on your host machine (e.g., your laptop running macOS, Linux, or Windows). If you are on a Mac, follow the official Docker for Mac installation instructions. The Confluent Docker images require Docker version 1.11 or greater. For reference, I ran the instructions in this blog post on a MacBook Pro with macOS Sierra.

Running the Kafka Music demo application

The first step is to clone the Confluent Docker Images repository. Then we can launch the Kafka Music demo application, including the services it depends on, such as Kafka. After a few seconds, the application and the services are up and running. One of the started containers continuously generates input data for the application by writing into the application’s input topics.

This allows us to look at live, real-time data when using the Kafka Music application. Now we can use a web browser or a CLI tool such as curl to interactively query the latest processing results of the Kafka Music application through its REST API. In other words, we can play around now! For example, we can list all running instances of the Kafka Music application, or get the latest Top 5 songs across all music genres. The application’s REST API supports further operations; see the application’s sources (for Confluent 3.2) for details. If you’d like to continue exploring, perhaps by creating new Kafka topics or launching additional demo applications, take a closer look at our Docker documentation. Once you’re done, you can stop all the services and containers.

Conclusion and Wrapping Up

What’s great about what we have just done is not the actual Kafka Music example. Rather, it’s that you can do the very same for your own applications!

You can containerize your Kafka Streams application, similar to what we have done for the Kafka Music application above, and you can also deploy it easily alongside other services such as an Apache Kafka cluster (with one or multiple brokers), and much more, including your own dockerized services. All you need is Docker and the Confluent Docker images for Apache Kafka and friends. If you need an example or template for containerizing your Kafka Streams application, take a look at the setup we used for this blog post. Lastly, the image for running the Kafka Music demo application actually contains all of Confluent’s Kafka Streams example applications. This means you can easily run any of these applications, too.

I won’t cover that in this blog post.

Next Steps

If you have enjoyed this article, you might want to continue with the following resources to learn more about Apache Kafka’s Streams API:

- Use the Kafka Streams API to build your own real-time applications and microservices.
- Walk through our tutorials and play with our demo applications.

In this Docker Online Meetup (#36: Docker for Windows and Mac), Product Managers Michael Chiang and Dave Tucker present an overview of Docker for Mac and Docker for Windows and then demo the beta. Docker for Mac and Docker for Windows are integrated, easy-to-deploy environments for building, assembling, and shipping applications from a Mac or Windows machine. They contain many improvements over Docker Toolbox. You can sign up for the beta at https://beta.docker.com.

Docker for Mac and Windows: Dave Tucker (dave.tucker@docker.com) and Michael Chiang (mchiang@docker.com).

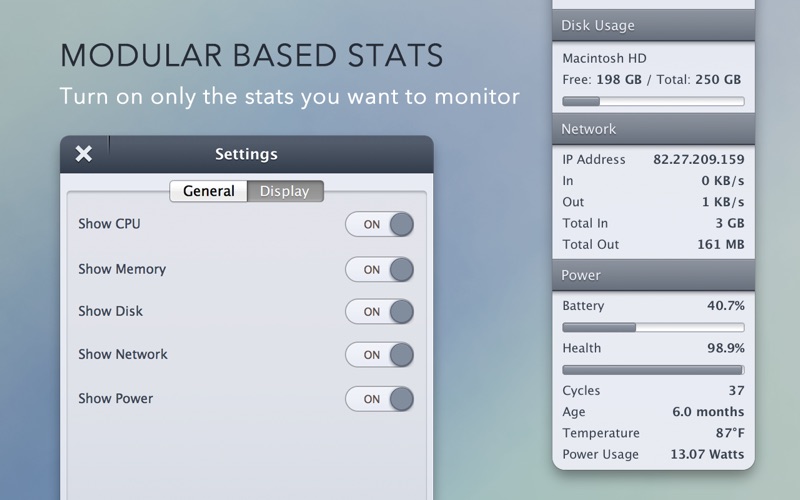

- As a native Mac and Windows app, Docker can now be installed, launched, and used from the system toolbar like any other packaged app.
- As with Docker for Linux, Docker for Mac and Windows brings deeper integration with each platform, leveraging its native virtualization features:
  - Docker for Mac and Windows include deep system-level development that directly integrates Docker with host-native virtualization (e.g., Apple’s Hypervisor framework and Microsoft Hyper-V), networking, filesystems, and security capabilities.
  - These integrated products include Docker Compose and Notary and offer a streamlined installation process that no longer requires non-system third-party software such as VirtualBox.
  - This results in significantly faster performance and a better developer workflow and file synchronization when editing and testing code.